Beyond the Proof-of-Concept: DH / AI Methods in the Real World

Published on June 19, 2022; updated on June 19, 2022

On Tuesday, May 10th, at the DHAI seminar, Benoit Seguin examined the benefits of, and obstacles to, the use of computational methods in digital humanities in a lecture entitled Beyond the Proof-of-Concept - DHAI Methods in the Real World. In Digital Humanities, computational methods are always used in an applied setting, driven by research questions. However, these research results are rarely converted into useful tools for researchers. Taking a step back from the different projects he took part in as an independent engineer (large photo-archive analysis, art market price estimation, visualization of text reuse in architecture treatises, ...), he highlighted the divide between research interest and end-user interest, and offered advice on integrating modern ML into DH projects.

The coupling Digital Humanities / AI

First, Benoit Seguin introduced the limits that constrain the benefits of mass digitization for Digital Humanities. The promises of mass digitization are huge, as it could help build tools to analyze very large sets of data, but these promises are hard to fulfill in practice. According to Benoit Seguin, it is conceptually hard to connect the intent of the research efficiently with computer science tools.

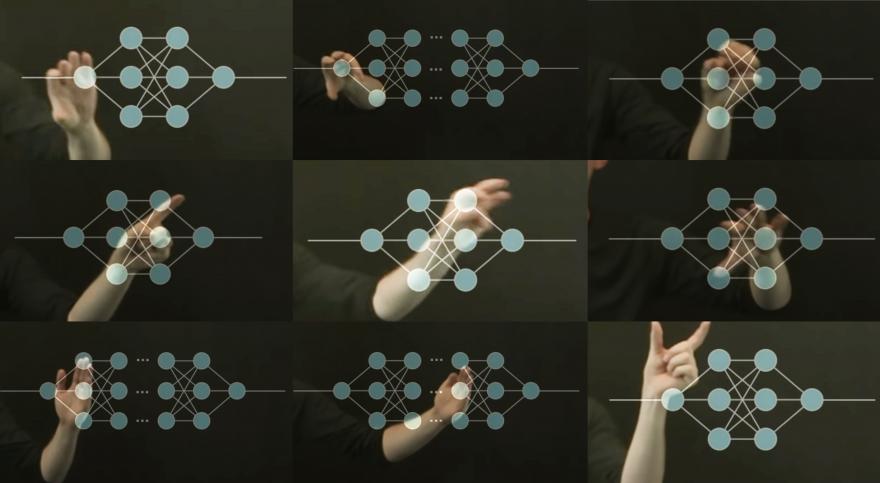

Benoit Seguin described the key phase of a project during which the research intent has to be adapted or converted into computer science tools. The first concept he developed is operationalization, which can be defined as “the conversion of a concept to a precisely defined structure, maybe through measurements”. The first limit in building tools for Digital Humanities is that not all research tasks or concepts are currently operationalizable. Those that are operationalizable today are the tasks that benefit from the larger scale offered by mass digitization and do not require any human intervention. Many computer science researchers try to expand the number of operationalizable research tasks and to build more tools, without focusing on how this operationalization can be relevant to a specific research problem in the humanities.

Benoit Seguin suggested another approach to better connect research needs and computer science tools. It consists in decomposing the original problem into operationalizable sub-tasks and working step by step to build relevant tools for researchers.

The process would follow this path:

- Take a research question

- Find/define one or multiple smaller operationalizable sub-questions useful for solving the initial one

- Process the sub-questions

- Gather the results together in a quantitative/qualitative analysis, maybe a visualization, etc.

This path is not linear: it involves moving back and forth between the steps to get more relevant and accurate results. Only once the sub-questions have been found is it possible to know what to operationalize and process in order to answer the research question.

The degree of operationalizability of research tasks evolves rapidly with technology. Benoit Seguin mentioned his “2 days / 2 years” rule: if the available methods do not perform well enough, it is better to come back to the same task or problem two years later, once general computational progress has been made.

Case studies

Getty Research Institute

After this introduction, he presented several case studies to illustrate these arguments. The first was a photo-archive analysis project for the Getty Research Institute. The research task was to extract, index, analyze and make searchable a large set of digitized documents including printed text, handwritten documents and stamps.

For this project, he followed his sub-task decomposition method. The first sub-task was semi-supervised photo extraction. The second was semi-supervised stamp detection and identification, which required a database of stamps. The third was image classification, a sub-task that was less easy to operationalize because its degree of operationalizability varies with the vocabulary (for example, distinguishing between biblical and mythological images). To work around this issue, the extracted photographs can be visually indexed so that they can be searched or matched for duplicates.
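As a rough illustration of what matching photographs for duplicates can look like, here is a minimal sketch. It uses an average hash compared by Hamming distance, which is a simple stand-in technique chosen for this example and not the method used in the Getty project (the lecture summary does not name one). The function names, the toy 3x3 grayscale grids and the `max_distance` threshold are all assumptions.

```python
from itertools import combinations


def average_hash(pixels):
    """One bit per pixel: 1 when the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if value > mean else 0 for value in flat)


def hamming(hash_a, hash_b):
    """Number of positions where two hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def find_duplicates(images, max_distance=1):
    """Return pairs of image names whose hashes differ by at most max_distance bits."""
    hashes = {name: average_hash(pixels) for name, pixels in images.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(hashes), 2)
        if hamming(hashes[a], hashes[b]) <= max_distance
    ]


# Toy grayscale images, assumed to be already downscaled to 3x3 (invented data).
images = {
    "photo_1": [[10, 200, 10], [200, 200, 200], [10, 200, 10]],
    "photo_1_rescan": [[20, 210, 15], [190, 205, 210], [12, 190, 25]],
    "photo_2": [[200, 10, 200], [10, 10, 10], [200, 10, 200]],
}
duplicates = find_duplicates(images)
```

In a real archive the hash would be replaced by a stronger visual descriptor, but the structure stays the same: compute one compact signature per photograph, then compare signatures instead of full images.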

He summed up the lessons learned from this decomposition into sub-tasks:

- Complex tasks can be decomposed into much easier ones. In practice, implementing a proper workflow is much more engineering work than research work.

- Semi-supervised approaches require more complex interfaces and processes which involve additional engineering work.

ETH Library

He then presented a second project, for the ETH Library, which focused on text reuse in architecture books. Tracing text reuse is important for architectural history, as it enables researchers to study the recurrence of particular themes, imitations of writers and elements of pre-1850 writing practices, in order to understand the circulation and transformation of ideas and communities of knowledge. The analyzed corpus consisted of more than 1300 titles from the rare books collection of the ETH Library, with a focus on architecture.

The limit for this research project was that computers do not understand meaning. The sub-tasks that can be achieved are textual correlation and efficient visualization. The process first consists in detecting similarities of words and characters, which can be done on a large corpus. This method generated a list of connections between books and made it possible to build a graph structure for visually exploring the recurrence of writings.
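The idea of turning textual similarity into a list of connections between books can be sketched in a few lines. The example below links two books when they share word 5-grams; this is a deliberately naive stand-in, not the algorithm of the tool used in the project, and the function names, the `n` and `min_shared` parameters and the toy corpus are assumptions made for illustration.

```python
from itertools import combinations


def shingles(text, n=5):
    """Return the set of word n-grams found in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def reuse_edges(books, n=5, min_shared=1):
    """Map each pair of books sharing at least min_shared n-grams to that count."""
    sets = {title: shingles(text, n) for title, text in books.items()}
    edges = {}
    for a, b in combinations(sorted(sets), 2):
        shared = len(sets[a] & sets[b])
        if shared >= min_shared:
            edges[(a, b)] = shared
    return edges


def connected_share(books, edges):
    """Fraction of books linked to at least one other book."""
    linked = {title for pair in edges for title in pair}
    return len(linked) / len(books)


# Toy corpus, invented for this example (not taken from the ETH Library).
books = {
    "A": "the column must be seven diameters high according to the ancients",
    "B": "vitruvius says the column must be seven diameters high in this order",
    "C": "a treatise on the construction of bridges and roads",
}
edges = reuse_edges(books)
share = connected_share(books, edges)
```

The `edges` dictionary is already a weighted graph: books are nodes and shared passages are links, which is the kind of structure a visual explorer can be built on. Exact n-gram matching would miss reuse hidden by OCR errors or spelling variation, which is one reason a dedicated tool is preferable on a real corpus.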

The workflow could be described this way:

- Gather metadata and perform OCR.

- Detect text reuse with the passim tool from David A. Smith, which can analyze a large corpus. This tool showed that 40% of the books are connected to at least one other book.

- Make the results explorable and build a powerful visualization tool.

This visualization involved a multi-layered structure with different focus points: a global view (overview of book connections), a middle-level view (such as searching for a keyword) and a close view (where the repeated passages are located in the books, and exploring the inside of the books with pictures).

Benoit Seguin explained that many lessons could be learned from this case study. First, a simple working method with high precision is often enough and does not require word embeddings or machine learning. Second, the project started as a generic textual/visual explorer; text reuse was an added experiment that stuck and proved very appropriate. It was also hard to predict whether a visualization method would work: the middle-level view was a success, for example, but the time-reordering feature in the graph view was a failure. Finally, making beautiful and useful visualization interfaces is much more work than processing the data, and this has to be built into the timeline before the research starts. The next step for this project is a fully working version of the visualization interface.

Predicting the art market

The final case study presented during the lecture was research on art market prediction, carried out in collaboration with Pierre-Alexis Voisin and Victor Raimbaud.

The process first involved acquiring a lot of data from public auctions, gallery prices and whatever financial information could be extracted. The second step was to train a model to predict art prices (estimated, realized, gallery) together with their uncertainty. The visual output of the model included some explanations for the price variation, for example a reference to a specific auction.
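To make the idea of predicting a price together with its uncertainty concrete, here is a minimal sketch. The lecture summary does not say which model was used, so this example swaps in a very simple technique: a bootstrap ensemble of least-squares fits on log prices, whose spread gives a rough interval. The single feature (canvas area), the sales figures and all function names are invented for illustration.

```python
import math
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict_with_uncertainty(sales, area, n_models=200, seed=0):
    """Return (median, low, high) price predictions from a bootstrap ensemble."""
    rng = random.Random(seed)
    predictions = []
    while len(predictions) < n_models:
        sample = [rng.choice(sales) for _ in sales]  # resample with replacement
        xs = [math.log(a) for a, _ in sample]
        ys = [math.log(p) for _, p in sample]
        if len(set(xs)) < 2:  # degenerate resample: a line cannot be fitted
            continue
        intercept, slope = fit_line(xs, ys)
        predictions.append(math.exp(intercept + slope * math.log(area)))
    predictions.sort()
    low = predictions[int(0.05 * n_models)]        # about the 5th percentile
    high = predictions[int(0.95 * n_models) - 1]   # about the 95th percentile
    return statistics.median(predictions), low, high


# Invented (area in cm2, price) pairs, standing in for cleaned auction records.
sales = [(1000, 5200), (1500, 7000), (2000, 9800), (2500, 11000),
         (4000, 21000), (5000, 24000), (6000, 33000), (8000, 41000)]
price, low, high = predict_with_uncertainty(sales, area=3000)
```

The interval here only reflects how much the fit changes when the data is resampled; it says nothing about missing or noisy records, which the lecture identified as the main difficulty.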

Overall, the model’s results were better than the expert estimates from auction houses. The issue was that the model was very difficult to regularize because the data was noisy and much of it was missing. The general lesson from this project was that data acquisition and normalization were the most difficult part of the process. The process could be improved by adding more data and improving pre-processing.

To conclude, Benoit Seguin reminded us that Digital Humanities should care about flexible and efficient methods rather than novel models or very powerful methods. In practice, Digital Humanities expects tools, products and frameworks that can tackle a large number of situations with minimal resources. The difficulties and solutions encountered during the case studies illustrate his point: many complicated options were abandoned, and basic computational tools proved perfectly adequate to serve the researcher’s intent.

Biography

Benoit Seguin is an independent engineer specialized in the applications of Machine Learning, especially to the domain of Cultural Heritage. He was a PhD student at the Digital Humanities Laboratory (DHLAB) at EPFL. His thesis was based on the Replica Project, in which he used machine learning and modern image processing to help art historians navigate very large iconographic collections. He has since published many research articles and given lectures on this topic. He has also been applying his technical expertise to multiple research projects, such as the Getty Research Institute, the development of an installation for Artlab, a Graph - Text Reuse Platform for the ETH Library and more.

Bibliography

- M. Gabrani, B. Seguin, H. Saab. Estimating pattern sensitivity to the printing process for varying dose/focus conditions for RET development in the sub-22nm era, in Metrology, Inspection, and Process Control for Microlithography XXVIII, 2014.

- I. di Lenardo, B. Seguin, F. Kaplan. Visual Patterns Discovery in Large Databases of Paintings, in Digital Humanities Conference 2016, Krakow.

- B. Seguin, C. Striolo, I. di Lenardo, F. Kaplan. Visual Link Retrieval in a Database of Paintings, in VISART Workshop at European Conference of Computer Vision 2016, Amsterdam.

- B. Seguin, I. di Lenardo, F. Kaplan. Tracking Transmission of Details in Paintings, in Digital Humanities Conference 2017, Montréal.

- W. Haaswijk*, E. Collins*, B. Seguin*, M. Soeken, S. Süsstrunk, F. Kaplan, S. De Micheli. Deep Learning for Logic Optimization, in International Workshop on Logic & Synthesis 2017.

- B. Seguin. The Replica Project: Building a visual search engine for art historians, in ACM XROADS Magazine, Spring 2018.

- B. Seguin, L. Costiner, I. di Lenardo, F. Kaplan. New Techniques for the Digitization of Art Historical Photographic Archives - the Case of the Cini Foundation in Venice, in Archiving 2018, Washington DC.

- B. Seguin, L. Costiner, I. di Lenardo, F. Kaplan. Extracting and Aligning Artist Names in Digitized Art Historical Archives, in Digital Humanities Conference 2018, Mexico.

- W. Haaswijk*, E. Collins*, B. Seguin*, M. Soeken, S. Süsstrunk, F. Kaplan, S. De Micheli. Deep Learning for Logic Optimization Algorithms, in International Symposium on Circuits and Systems 2018.

- S. Ares Oliveira*, B. Seguin*, F. Kaplan. dhSegment: A generic deep-learning approach for document segmentation, in International Conference on Frontiers in Handwriting Recognition 2018, Niagara Falls.

- B. Seguin. Making large art historical photo archives searchable, EPFL PhD Thesis, 2018.
